42 LabelBinarizer vs OneHotEncoder

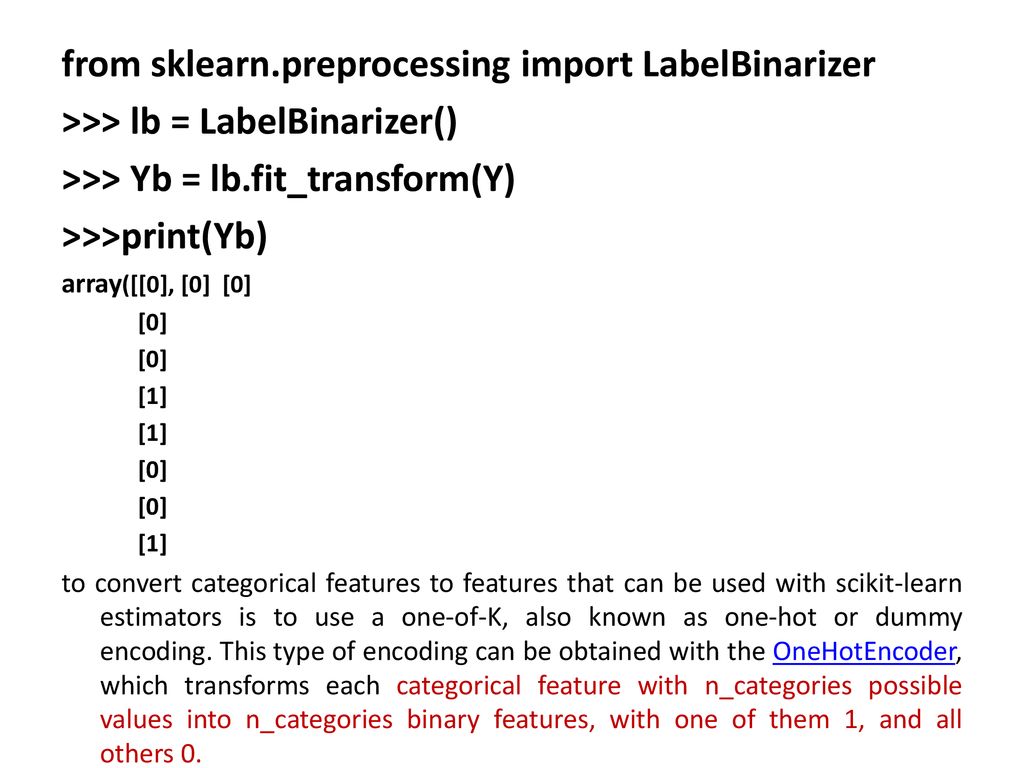

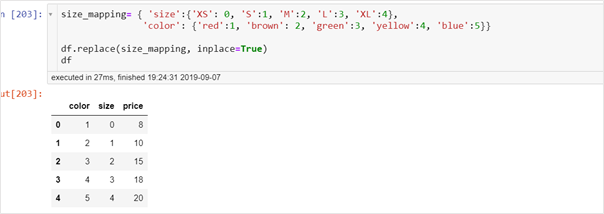

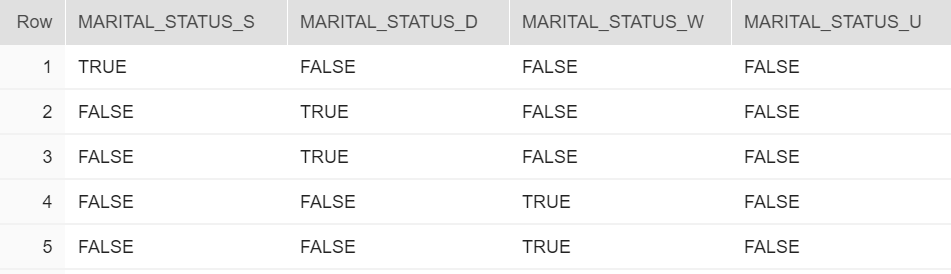

Scikit-learn's LabelBinarizer vs. OneHotEncoder - Intellipaat Label Binarizer: It assigns a unique value or number to each label in a categorical feature. ... One Hot Encoding: It encodes categorical integer ... Difference between LabelEncoder and LabelBinarizer? LabelEncoder does not create a dummy variable for each category in your data, whereas LabelBinarizer does. Here is an example from the documentation.
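A minimal sketch of that difference, assuming a small made-up list of labels (the data is illustrative and does not come from any of the linked posts): LabelEncoder returns one integer code per label, while LabelBinarizer returns one indicator column per class.

```python
from sklearn.preprocessing import LabelBinarizer, LabelEncoder

# Illustrative labels (an assumption, not data from the linked posts).
labels = ["cat", "dog", "bird", "dog"]

# LabelEncoder: a single integer code per label, classes sorted alphabetically.
codes = LabelEncoder().fit_transform(labels)
print(codes)  # [1 2 0 2]

# LabelBinarizer: one 0/1 indicator ("dummy") column per class.
indicators = LabelBinarizer().fit_transform(labels)
print(indicators)
# [[0 1 0]
#  [0 0 1]
#  [1 0 0]
#  [0 0 1]]
```

The integer codes imply an order (bird < cat < dog) that the labels do not have, which is why the indicator form is usually preferred for nominal categories.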

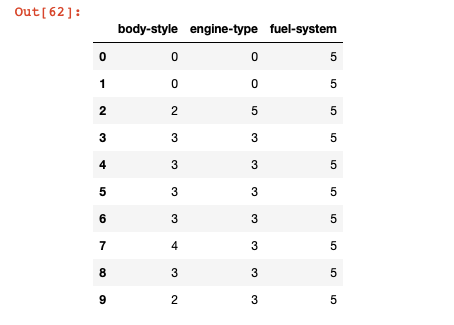

[Python] Using string data with scikit-learn's SVM - Qiita Scikit-learn's LabelBinarizer vs. OneHotEncoder - stackoverflow. OrdinalEncoder: like LabelEncoder, it assigns integers in ascending alphabetical order. The difference is that, like OneHotEncoder, it takes a 2-D array as input. A 2-D array is more convenient when working with a pandas DataFrame.
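The input-shape difference described above can be sketched as follows. The city names are an assumed toy example; only the 1-D versus 2-D calling convention is the point.

```python
from sklearn.preprocessing import LabelEncoder, OrdinalEncoder

# Illustrative values (an assumption for this sketch).
cities = ["tokyo", "osaka", "nagoya", "osaka"]

# LabelEncoder takes a 1-D sequence and returns 1-D integer codes.
label_codes = LabelEncoder().fit_transform(cities)

# OrdinalEncoder takes a 2-D array: one row per sample, one column per feature.
ordinal_codes = OrdinalEncoder().fit_transform([[c] for c in cities])

print(label_codes)          # [2 1 0 1]
print(ordinal_codes.shape)  # (4, 1)
```

Both encoders sort the categories alphabetically here (nagoya, osaka, tokyo), so the codes agree. OrdinalEncoder returns them as floats in a column.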

LabelBinarizer vs OneHotEncoder

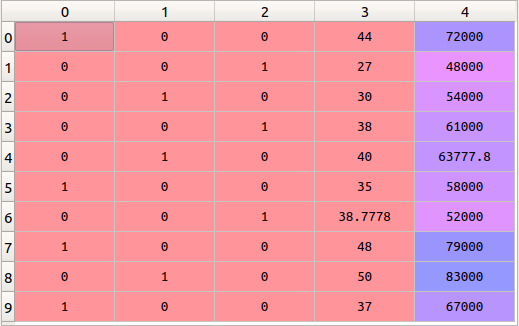

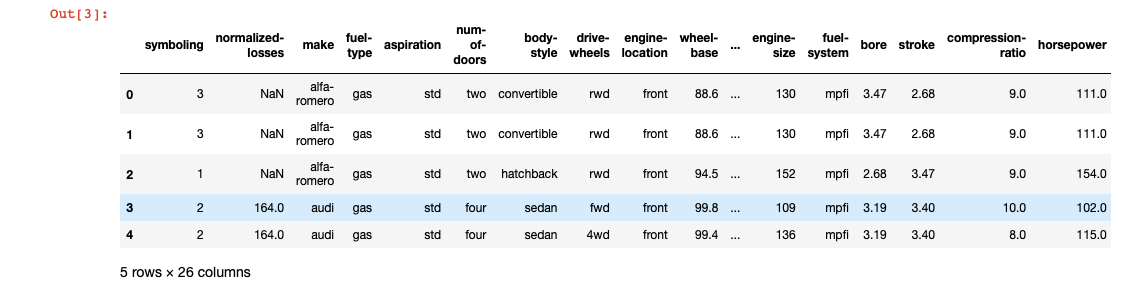

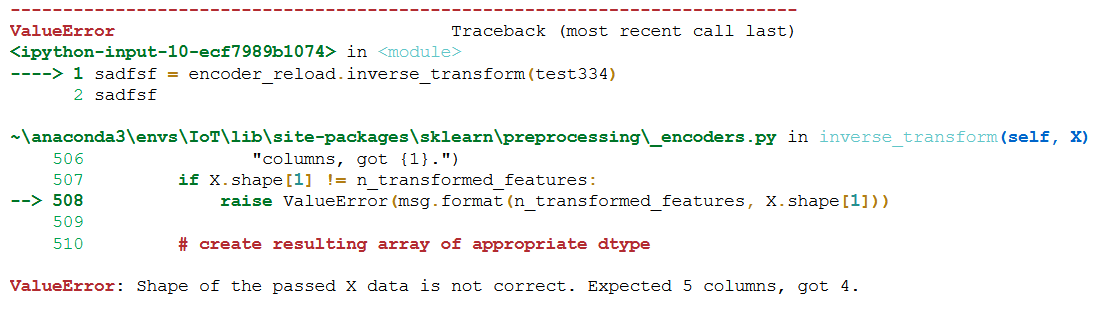

Encoding Categorical data in Machine Learning - Medium # Encoding drive-wheels and engine-location columns using ColumnTransformer and OneHotEncoder from sklearn.compose import ColumnTransformer from sklearn.preprocessing import OneHotEncoder ... Scikit-learn's LabelBinarizer vs. OneHotEncoder A simple example which encodes an array using LabelEncoder, OneHotEncoder, LabelBinarizer is shown below. I see that OneHotEncoder needs data in integer en… Labelbinarizer inverse transform - wunc.sundrygods.shop LabelBinarizer: LabelEncoder converts strings to numbers, but such values are usually treated as several features rather than as a single one. For example, the three values [tokyo, osaka, nagoya] ... LabelBinarizer makes this process easy with the transform method. At prediction time, one assigns the class for which the corresponding model gave the greatest ...

preprocessing.LabelBinarizer() - Scikit-learn - W3cubDocs class sklearn.preprocessing.LabelBinarizer(neg_label=0, pos_label=1, sparse_output=False) [source] Binarize labels in a one-vs-all fashion. Several regression and binary classification algorithms are available in scikit-learn. A simple way to extend these algorithms to the multi-class classification case is to use the so-called one-vs-all scheme. Scikit-learn's LabelBinarizer vs. OneHotEncoder - Stack Overflow The results of OneHotEncoder() and LabelBinarizer() are almost the same (there might be differences in the default output type). However, to the best of my understanding, LabelBinarizer() should ideally be used for response variables and OneHotEncoder() should be used for feature variables. python - Scikit-learn's LabelBinarizer vs. OneHotEncoder A simple example which encodes an array using LabelEncoder, OneHotEncoder, LabelBinarizer is shown below. I see that OneHotEncoder needs ... Deciding between get_dummies and LabelEncoder for categorical variables ... Looking at your problem, get_dummies is the option to go with, as it gives equal weight to the categorical variables. LabelEncoder is used when the categorical variables are ordinal, i.e. if you are converting severity or ranking, then encoding "High" as 2 and "Low" as 1 would make sense.
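To make the "almost the same, apart from the default output type" remark concrete, here is a hedged sketch on an assumed toy column of colour names. It encodes the same values with both classes and compares the results.

```python
import numpy as np
from sklearn.preprocessing import LabelBinarizer, OneHotEncoder

# Illustrative values (an assumption for this sketch).
values = ["red", "green", "blue", "green"]

# LabelBinarizer: 1-D input, dense NumPy integer array by default.
y_encoded = LabelBinarizer().fit_transform(values)

# OneHotEncoder: 2-D input, SciPy sparse matrix of floats by default.
X_sparse = OneHotEncoder().fit_transform(np.array(values).reshape(-1, 1))
X_encoded = X_sparse.toarray()

print(type(y_encoded).__name__, y_encoded.dtype)
print(type(X_sparse).__name__, X_encoded.dtype)
print(np.array_equal(y_encoded, X_encoded))  # True: same 0/1 pattern
```

One caveat: with exactly two classes, LabelBinarizer returns a single column, whereas OneHotEncoder still returns two, so the outputs match only for three or more classes.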

Python LabelBinarizer.inverse_transform Examples Python LabelBinarizer.inverse_transform - 30 examples found. These are the top rated real world Python examples of sklearn.preprocessing.LabelBinarizer.inverse_transform extracted from open source projects. ... """OneHotEncoder that can handle categorical variables.""" def __init__(self): """Convert labeled categories into one-hot encoded ... Python Examples of sklearn.preprocessing.OneHotEncoder - ProgramCreek.com The following are 30 code examples of sklearn.preprocessing.OneHotEncoder(). You can vote up the ones you like or vote down the ones you don't like, and go to the original project or source file by following the links above each example. [Learning Python] sklearn.preprocessing.LabelBinarizer() Mar 13, 2018 · Differences between LabelEncoder, LabelBinarizer, and OneHotEncoder import numpy as np from sklearn.preprocessing import LabelEncoder, LabelBinarizer, ... Python Examples of sklearn.preprocessing.LabelBinarizer - ProgramCreek.com The following are 30 code examples of sklearn.preprocessing.LabelBinarizer(). You can vote up the ones you like or vote down the ones you don't like, and go to the original project or source file by following the links above each example. ... (df.preprocessing.OneHotEncoder, pp.OneHotEncoder) self.assertIs(df.preprocessing.PolynomialFeatures ...
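Since several of these snippets mention `LabelBinarizer.inverse_transform` without showing it, here is a small round-trip sketch. The labels are assumed for illustration.

```python
from sklearn.preprocessing import LabelBinarizer

binarizer = LabelBinarizer()

# Illustrative labels (an assumption for this sketch).
encoded = binarizer.fit_transform(["yes", "no", "maybe", "no"])

# inverse_transform maps each indicator row back to its original label.
decoded = binarizer.inverse_transform(encoded)

print(binarizer.classes_)  # ['maybe' 'no' 'yes']
print(decoded)             # ['yes' 'no' 'maybe' 'no']
```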

Scikit-learn's LabelBinarizer vs. OneHotEncoder - anycodings A simple example which encodes an array using LabelEncoder, OneHotEncoder, LabelBinarizer is ... Version 1.1.2 — scikit-learn 1.1.2 documentation Feature preprocessing.OneHotEncoder now supports grouping infrequent categories into a single feature. Grouping infrequent categories is enabled by specifying how to select infrequent categories with min_frequency or max_categories. #16018 by Thomas Fan. Ordinal Encoder, OneHotEncoder and LabelBinarizer in Python - Suresh C One major difference between LabelBinarizer and OneHotEncoder is that LabelBinarizer works only on a 1-dimensional array. If you provide a 2-dimensional array to LabelBinarizer, it throws an error. So I have defined a 1-d array and use this encoder to transform the data. labelArray = np.array([['Male'], ['Female'], ['Unknown']]) binarizer = LabelBinarizer() When to use One Hot Encoding vs LabelEncoder vs DictVectorizor? One-hot encoding has the advantage that the result is binary rather than ordinal and that everything sits in an orthogonal vector space. The disadvantage is that for high cardinality, the feature space can blow up quickly and you start fighting the curse of dimensionality.

Scikit-learn's LabelBinarizer vs. OneHotEncoder (29 May 2022) Answer #2: One difference is that you can use OneHotEncoder on multi-column data, but not LabelBinarizer or LabelEncoder.
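A short sketch of the multi-column point, using an assumed two-column toy table: OneHotEncoder encodes every column in one call, while LabelBinarizer is applied to a single column of labels.

```python
from sklearn.preprocessing import LabelBinarizer, OneHotEncoder

# Two categorical columns (illustrative data, an assumption for this sketch).
X = [["Male", "red"], ["Female", "blue"], ["Unknown", "red"]]

# OneHotEncoder handles all columns at once.
encoder = OneHotEncoder()
encoded = encoder.fit_transform(X).toarray()
print(encoder.categories_)
print(encoded.shape)  # (3, 5): three gender columns plus two colour columns

# LabelBinarizer is applied to one column at a time.
gender = [row[0] for row in X]
gender_encoded = LabelBinarizer().fit_transform(gender)
print(gender_encoded.shape)  # (3, 3)
```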

API Reference — scikit-learn 1.1.2 documentation The one-vs-the-rest meta-classifier also implements a predict_proba method, so long as such a method is implemented by the base classifier. This method returns probabilities of class membership in both the single label and multilabel case.

msmbuilder.preprocessing.LabelBinarizer LabelBinarizer(neg_label=0, pos_label=1, sparse_output=False) Binarize labels in a one-vs-all fashion. Several regression and binary classification algorithms are available in the scikit. A simple way to extend these algorithms to the multi-class classification case is to use the so-called one-vs-all scheme.

sklearn.preprocessing.LabelBinarizer — scikit-learn 1.1.2 ... Binarize labels in a one-vs-all fashion. Several regression and binary classification algorithms are available in scikit-learn. A simple way to extend these algorithms to the multi-class classification case is to use the so-called one-vs-all scheme. At learning time, this simply consists in learning one regressor or binary classifier per class.
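The one-vs-all scheme described here can be sketched by hand. This is an illustration under stated assumptions, not how scikit-learn implements it internally: the toy points, the class names, and the choice of LogisticRegression as the binary classifier are all assumptions.

```python
import numpy as np
from sklearn.linear_model import LogisticRegression
from sklearn.preprocessing import LabelBinarizer

# Three small, well-separated clusters (illustrative data).
X = np.array([[0.0, 0.0], [0.5, 0.3], [5.0, 0.0],
              [5.2, 0.4], [0.0, 5.0], [0.3, 5.1]])
y = np.array(["a", "a", "b", "b", "c", "c"])

binarizer = LabelBinarizer()
Y = binarizer.fit_transform(y)  # one 0/1 target column per class

# Learning time: fit one binary classifier per class.
models = [LogisticRegression().fit(X, Y[:, k]) for k in range(Y.shape[1])]

# Prediction time: choose the class whose model gives the greatest score.
scores = np.column_stack([m.decision_function(X) for m in models])
predicted = binarizer.classes_[scores.argmax(axis=1)]
print(predicted)
```

In practice, `sklearn.multiclass.OneVsRestClassifier` wraps this pattern, so the manual loop is mainly useful for seeing what the binarized targets are for.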

Python One Hot Encoding with SciKit Learn - HackDeploy Use the LabelBinarizer Scikit learn class; Generate Test Data. In order to get started, let's first generate a test data frame that we can play with. ... First, initialize the OneHotEncoder class to transform the color feature. The fit_transform method expects a 2D array, so reshape the 1D array into a 2D array. ...

Label_binarize vs LabelBinarizer vs Onehotencoder - Kaggle But there is also another method using sklearn.preprocessing.LabelBinarizer, which does the same work and takes some extra parameters. Both return a dense array by default and a sparse matrix when sparse_output=True.

Scikit-learn's LabelBinarizer vs. OneHotEncoder - Blogmepost It encodes categorical integer features as a one-hot numeric array. It makes model training easier and faster. For example: from sklearn.preprocessing import OneHotEncoder enc = OneHotEncoder(handle_unknown='ignore') X = [['Male', 1], ['Female', 3], ['Female', 2]] enc.fit(X) Hope this answer helps.
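The snippet stops at `enc.fit(X)`. A hedged continuation, showing what `handle_unknown='ignore'` does at transform time, might look like this. The `"Other"` category is an assumed unseen value added for illustration.

```python
from sklearn.preprocessing import OneHotEncoder

enc = OneHotEncoder(handle_unknown="ignore")
X = [["Male", 1], ["Female", 3], ["Female", 2]]
enc.fit(X)

# Categories learned per column: ['Female', 'Male'] and [1, 2, 3].
print(enc.categories_)

# A known row sets one indicator per column.
known = enc.transform([["Female", 1]]).toarray()
print(known)    # [[1. 0. 1. 0. 0.]]

# An unseen category ("Other" is hypothetical) becomes all zeros for that column.
unknown = enc.transform([["Other", 1]]).toarray()
print(unknown)  # [[0. 0. 1. 0. 0.]]
```

With the default `handle_unknown='error'`, the second transform would raise an error instead.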

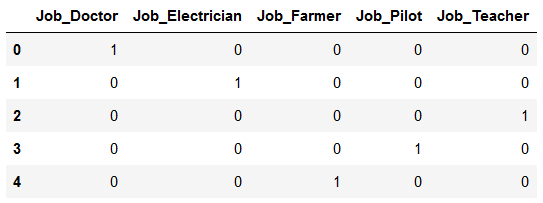

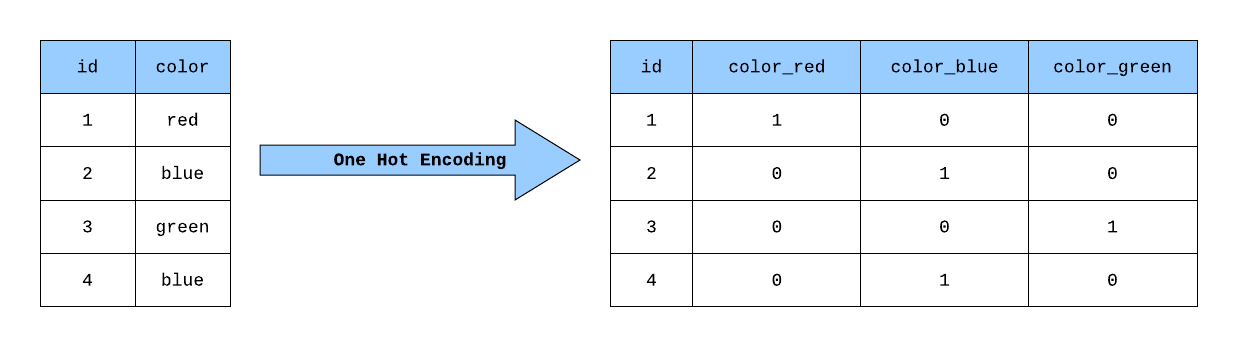

Label Encoder vs. One Hot Encoder in Machine Learning One hot encoding takes a column of categorical data that has been label encoded and splits it into multiple columns. The numbers are replaced by 1s and 0s,...
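The "label encode, then split into columns" idea can be sketched with NumPy alone. The country names are assumed toy data, and indexing an identity matrix is just one convenient way to do the split, not the method the article uses.

```python
import numpy as np
from sklearn.preprocessing import LabelEncoder

# Illustrative column (an assumption for this sketch).
countries = ["France", "Spain", "Germany", "Spain"]

# Step 1: label encode to integer codes (France=0, Germany=1, Spain=2).
codes = LabelEncoder().fit_transform(countries)
print(codes)  # [0 2 1 2]

# Step 2: split into one column per code by picking rows of an identity matrix.
one_hot = np.eye(codes.max() + 1, dtype=int)[codes]
print(one_hot)
# [[1 0 0]
#  [0 0 1]
#  [0 1 0]
#  [0 0 1]]
```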

Categorical Data Encoding with Sklearn LabelEncoder and OneHotEncoder ... As we discussed in the label encoding vs one hot encoding section above, we can clearly see the same shortcomings of label encoding in the above examples as well. With label encoding, the model had an accuracy of only 66.8%, but with one hot encoding, the accuracy of the model shot up by 22% to 86.74%.

preprocessing.OneHotEncoder() - Scikit-learn - W3cubDocs The OneHotEncoder previously assumed that the input features take on values in the range [0, max(values)). This behaviour is deprecated. This encoding is needed for feeding categorical data to many scikit-learn estimators, notably linear models and SVMs with the standard kernels.

Label Encoder vs. One Hot Encoder in Machine Learning - ICHI.PRO If you are new to Machine Learning, you might get confused between these two: Label Encoder and One Hot Encoder. Both encoders are part of the SciKit Learn library in Python, and both are used to convert categorical data, or text data, into numbers, which our predictive models can better understand.

One-Hot Encoding in Python with Pandas and Scikit-Learn - Stack Abuse We can see from the tables above that more digits are needed in one-hot representation compared to Binary or Gray code. For n digits, one-hot encoding can only represent n values, while Binary or Gray encoding can represent 2^n values using n digits.
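The digit-count comparison can be checked with a few lines of plain Python. The helper names `one_hot_width` and `binary_width` are hypothetical, introduced only for this sketch.

```python
def one_hot_width(num_values: int) -> int:
    """Digits needed by one-hot encoding: one per distinct value."""
    return num_values


def binary_width(num_values: int) -> int:
    """Digits needed by binary (or Gray) code: smallest n with 2**n >= num_values."""
    return max(1, (num_values - 1).bit_length())


for k in (2, 8, 100):
    print(k, one_hot_width(k), binary_width(k))
# 2 2 1
# 8 8 3
# 100 100 7
```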

OneHotEncoding vs LabelEncoder vs pandas getdummies — How and Why? | by ... In older scikit-learn releases (before 0.20), OneHotEncoder needs data in integer encoded form first to convert into its respective encoding, which is not required in the case of LabelBinarizer. Scikitlearn suggests using OneHotEncoder for X...

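Label binarization with LabelBinarizer - 最小森林's blog - CSDN Blog - labelbinarizer Aug 30, 2017 · For nominal data, preprocessing.LabelBinarizer is a very handy tool. For example, it can convert yes and no into 0 and 1, or incident and normal into 0 and 1. Of course, it also works for labels with more than two classes.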

Use ColumnTransformer in SciKit instead of LabelEncoding and ... For this, we'll still need to import the OneHotEncoder class in our code. But instead of the LabelEncoder class, we'll use the new ColumnTransformer. So let's import these two first: from sklearn.preprocessing import OneHotEncoder from sklearn.compose import ColumnTransformer
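A minimal sketch of how those two imports are typically combined, assuming a toy array with one categorical and one numeric column. The values and the `"onehot"` step name are illustrative assumptions.

```python
import numpy as np
from sklearn.compose import ColumnTransformer
from sklearn.preprocessing import OneHotEncoder

# Column 0 is categorical, column 1 is numeric (illustrative data).
X = np.array([["fwd", 2.0], ["rwd", 3.5], ["4wd", 1.8]], dtype=object)

transformer = ColumnTransformer(
    transformers=[("onehot", OneHotEncoder(), [0])],  # encode column 0 only
    remainder="passthrough",                          # keep the other columns
    sparse_threshold=0,                               # always return a dense array
)
X_encoded = transformer.fit_transform(X)

print(X_encoded.shape)  # (3, 4): three indicator columns plus the numeric one
print(X_encoded[0])
```

The encoded columns come first and the passed-through columns follow, so the first row reads as indicators for 4wd, fwd, rwd and then the original numeric value.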

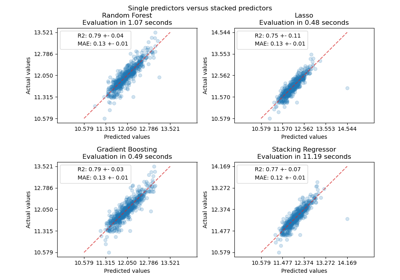

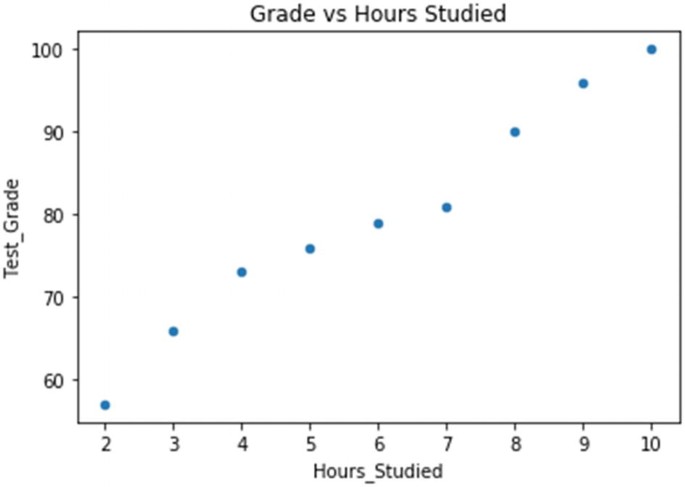

Choosing the right Encoding method-Label vs OneHot Encoder The RMSE of the One Hot Encoder is lower than that of the Label Encoder, which means the One Hot Encoder has given better accuracy, since the closer the RMSE is to 0, the better the accuracy. Again, don't be worried about such a large RMSE; as I said, this is just sample data that has helped us understand the impact of Label and OneHot encoders on our model.

MultiLabelBinarizer, how does it actually work ? | Data ... - Kaggle MultiLabelBinarizer allows you to encode multiple labels per instance. To translate the resulting array, you could build a DataFrame with this array and the encoded classes (through its "classes_" attribute). binarizer = MultiLabelBinarizer() pd.DataFrame(binarizer.fit_transform(y), columns=binarizer.classes_) Hope this helps!
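The answer leaves `y` undefined. A self-contained sketch with an assumed list of label sets shows how the indicator columns line up with `classes_`. It skips the DataFrame step so that it only needs scikit-learn.

```python
from sklearn.preprocessing import MultiLabelBinarizer

# Each sample carries a set of labels (illustrative data, an assumption).
y = [{"sci-fi", "thriller"}, {"comedy"}, {"comedy", "sci-fi"}]

binarizer = MultiLabelBinarizer()
indicator = binarizer.fit_transform(y)

print(binarizer.classes_)  # ['comedy' 'sci-fi' 'thriller']
print(indicator)
# [[0 1 1]
#  [1 0 0]
#  [1 1 0]]

# inverse_transform recovers the label tuples from the indicator matrix.
restored = binarizer.inverse_transform(indicator)
print(restored)
```

Column j of the indicator matrix corresponds to `binarizer.classes_[j]`, which is what the `columns=binarizer.classes_` argument in the quoted answer relies on.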

Target Encoding Vs. One-hot Encoding with Simple Examples One-hot encoding can create very high dimensionality depending on the number of categorical features you have and the number of categories per feature. This can become problematic not only in...
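To see how quickly the dimensionality grows, this sketch compares output widths on an assumed synthetic table with one high-cardinality column and one moderate one. OrdinalEncoder is used only as a fixed-width baseline; target encoding itself is not shown.

```python
from sklearn.preprocessing import OneHotEncoder, OrdinalEncoder

# 100 rows: a unique ID per row and a column with 20 distinct values
# (synthetic data, an assumption for this sketch).
X = [[f"user{i}", f"zip{i % 20}"] for i in range(100)]

ordinal = OrdinalEncoder().fit_transform(X)
one_hot = OneHotEncoder().fit_transform(X)  # sparse by default

print(ordinal.shape)  # (100, 2): one output column per input column
print(one_hot.shape)  # (100, 120): one output column per distinct category
```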

sklearn.preprocessing.OneHotEncoder — scikit-learn 1.1.2 ... Performs an approximate one-hot encoding of dictionary items or strings. LabelBinarizer Binarizes labels in a one-vs-all fashion. MultiLabelBinarizer Transforms between iterable of iterables and a multilabel format, e.g. a (samples x classes) binary matrix indicating the presence of a class label. Examples

3 Ways to Encode Categorical Variables for Deep Learning The scikit-learn library provides the OneHotEncoder to automatically one hot encode one or more variables. The prepare_inputs() function below provides a drop-in replacement function for the example in the previous section. Instead of using an OrdinalEncoder, it uses a OneHotEncoder.

One Hot Encoding in Scikit-Learn - ritchieng.github.io Don't use LabelEncoder to transform categorical features into numerical ones; just use OneHotEncoder. Using LabelEncoder introduces a problem of data ordering: when your categorical data gets encoded into, say, 1, 2, 3, the model sees a clear ordering 1 < 2 < 3, but for categorical features this ordering does not actually exist.
